Hello again, my friends!

Whether you’re just starting out on your audio journey or have been doing this for a while, you may have heard the terms sample rate and bit depth. They are essential to digital audio; without them, digital audio wouldn’t exist!

Sample rate is the number of samples per second taken from a continuous audio signal to create a digital representation of that signal. Bit depth refers to the number of bits used to represent each audio sample.

Those are textbook definitions that are 100% correct, but they don’t do much to help you understand what these terms actually mean if you don’t already have a grasp of digital audio. So, I’m going in depth on some of my favorite nerdy topics. Even if you know nothing about digital audio, by the end of this post I’m confident you will be able to grasp the concepts of sample rate and bit depth.


Understanding Audio Sample Rate and Bit Depth

Sample rate and bit depth are two fundamental aspects of digital audio that play a critical role in determining the overall quality of an audio recording. Let’s start by looking at each one in greater detail.

Sample Rate

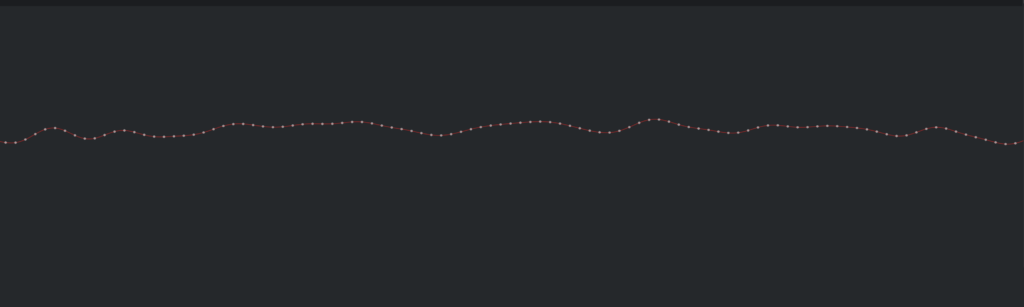

Sample rate refers to the number of samples per second taken from a continuous audio signal to create a discrete digital representation. It’s measured in hertz (Hz) or kilohertz (kHz). The more samples taken per second, the higher the sample rate, and the more accurate the digital representation of the original audio signal, particularly at high frequencies.

The way I like to explain sample rate is like this: think about a camera recording a video. When you record a video your camera is recording at a set number of frames per second (fps). If your frame rate is 30fps, that means that the camera has taken 30 separate pictures in one second. When that is played back you have a “moving picture” or a video.

When you use a higher frame rate, you get more detail in the movement in your video. Things look more natural. A frame rate of 60fps is closer to how we actually perceive the world around us, which is why videos shot at that frame rate look more realistic, and feel much more fluid.

So, if we think of sample rate like frame rate, the two function similarly. Sample rate is how many snapshots (pictures) are taken of an audio signal per second. If we use 44.1 kHz as an example, that means each second of audio is represented by 44,100 snapshots! That is A LOT of data. It is one of the reasons that CD-quality audio has been the gold standard of digital audio since its introduction in 1982.
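If you’d like to see the “snapshot” idea in action, here is a minimal Python sketch. It assumes a pure 440 Hz sine wave stands in for the audio signal; the function name `sample_sine` is made up for illustration and isn’t from any audio library.

```python
import math


def sample_sine(freq_hz: float, sample_rate_hz: int, seconds: float) -> list[float]:
    """Take evenly spaced 'snapshots' of a sine wave's amplitude."""
    n_samples = int(sample_rate_hz * seconds)
    # Snapshot n is taken at time t = n / sample_rate_hz seconds
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate_hz) for n in range(n_samples)]


samples = sample_sine(440.0, 44_100, 1.0)
print(len(samples))  # 44100 snapshots for one second of audio
print(f"{1e6 / 44_100:.2f} microseconds between snapshots")  # 22.68
```

At 44.1 kHz, a new snapshot is taken roughly every 22.7 microseconds.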

Much like increasing the frame rate of a camera will provide much more data for displaying a video, increasing the sample rate at recording will create a much more complete “picture” of what the original analog waveform actually is. There is much debate about whether higher sample rates are worth the increase in file size. I’m not going to go down that rabbit hole in this blog post; that is a conversation for another day!

Now that we know what sample rate is, here are the most commonly used sample rates in audio production:

- 44.1 kHz (CD quality)

- 48 kHz (often used in video and film production)

- 88.2 kHz (2x CD quality)

- 96 kHz (a popular choice for high-resolution audio)

- 192 kHz (ultra-high-resolution audio)
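To put the file-size side of that debate into numbers, here is a rough Python calculation of how much uncompressed storage one minute of audio needs at each of these rates. It assumes 24-bit samples and stereo (two channels); those are illustrative choices, and real files add a small header on top.

```python
# Uncompressed storage for one minute of audio at each common sample rate.
# Assumes 24-bit (3-byte) samples and 2 channels (stereo): illustrative choices.
BYTES_PER_SAMPLE = 3
CHANNELS = 2
SECONDS = 60


def bytes_per_minute(sample_rate_hz: int) -> int:
    """Samples per second x bytes per sample x channels x seconds."""
    return sample_rate_hz * BYTES_PER_SAMPLE * CHANNELS * SECONDS


for rate in (44_100, 48_000, 88_200, 96_000, 192_000):
    print(f"{rate / 1000:g} kHz: {bytes_per_minute(rate) / 1e6:.1f} MB per minute")
# 44.1 kHz: 15.9 MB per minute
# 48 kHz: 17.3 MB per minute
# 88.2 kHz: 31.8 MB per minute
# 96 kHz: 34.6 MB per minute
# 192 kHz: 69.1 MB per minute
```

Doubling the sample rate doubles the storage, so a 96 kHz session fills a drive about twice as fast as a 48 kHz one.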

Bit Depth

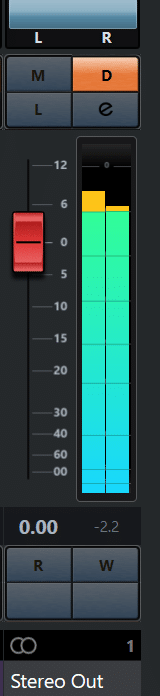

Bit depth, on the other hand, refers to the number of bits used to represent each audio sample. The higher the bit depth, the greater the dynamic range, and the more accurate the representation of the audio signal’s amplitude. Common bit depths include 16-bit (CD quality), 24-bit (used in professional recording), and 32-bit (used in some high-resolution audio formats and digital audio workstations).

In simpler terms, you can think of bit depth as the resolution of the audio signal. Continuing the camera analogy: a higher-resolution image provides more detail and clarity, and in the same way, a higher bit depth offers more precision in representing the amplitude (volume) of an audio signal at any given moment.
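A short Python sketch can show what “more precision” means. It assumes amplitudes are floats between -1.0 and 1.0 and maps them onto signed integer levels by scaling with the largest positive level; that is one common convention, and real converters may differ in the details. The helper names are hypothetical.

```python
def quantize(amplitude: float, bits: int) -> int:
    """Round an amplitude in [-1.0, 1.0] to the nearest signed integer level."""
    max_level = 2 ** (bits - 1) - 1  # 32767 for 16-bit, 8388607 for 24-bit
    level = round(amplitude * max_level)
    # Clamp so out-of-range input can't exceed the representable levels
    return max(-max_level - 1, min(max_level, level))


def quantization_error(amplitude: float, bits: int) -> float:
    """How far the stored level is from the true amplitude."""
    max_level = 2 ** (bits - 1) - 1
    return abs(amplitude - quantize(amplitude, bits) / max_level)


for bits in (8, 16, 24):
    print(f"{bits}-bit: error = {quantization_error(0.3, bits):.2e}")
```

Each extra bit halves the size of the rounding steps, so the error shrinks as the bit depth goes up.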

In digital audio, the most common bit depths are 16-bit (CD quality) and 24-bit (used in professional recording). A 16-bit audio file can represent 65,536 different amplitude levels, while a 24-bit file can represent over 16 million levels.

As a result, higher bit depths offer a greater dynamic range, which means they can capture and reproduce a wider range of loud and soft sounds with more accuracy and less noise.
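Those level counts come straight from powers of two, and a common rule of thumb turns them into dynamic range: each bit adds about 6 dB. Here is a Python sketch of that theoretical calculation. It ignores real-world converter noise, and a formula based on a full-scale sine wave (6.02 × bits + 1.76 dB) gives a slightly higher figure.

```python
import math


def dynamic_range_db(bits: int) -> float:
    """Theoretical dynamic range: ratio of full scale to one step, in decibels."""
    return 20 * math.log10(2 ** bits)


for bits in (16, 24):
    print(f"{bits}-bit: {2 ** bits:,} levels, about {dynamic_range_db(bits):.1f} dB")
# 16-bit: 65,536 levels, about 96.3 dB
# 24-bit: 16,777,216 levels, about 144.5 dB
```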

In recent years, most DAWs (digital audio workstations) can work at 32-bit, and some even at 64-bit. At these resolutions the audio is usually stored as floating-point numbers, which gives an enormous amount of theoretical headroom: signals can go far above 0 dBFS inside the DAW without clipping, as long as they are brought back down before export. However, most consumer playback devices still operate at a maximum of 24-bit. Audiophile devices that can cost thousands of dollars do support 32-bit.
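Here is a small Python sketch of why floating point feels like it has endless headroom. It assumes the usual convention where 1.0 is full scale (0 dBFS), uses Python’s standard `struct` module to store a value as a real 32-bit float, and compares that with a 16-bit integer sample. The helper names are hypothetical.

```python
import struct


def to_float32(x: float) -> float:
    """Round-trip a value through 32-bit float storage."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_int16(x: float) -> int:
    """Store a value as a 16-bit integer sample, clipping at full scale."""
    return max(-32768, min(32767, round(x * 32767)))


sample = 0.8
gain = 4.0  # about +12 dB, pushing the sample well past 0 dBFS (1.0)

# Boost, store, then turn it back down (like raising and lowering a fader)
float_result = to_float32(sample * gain) / gain
int_result = to_int16(sample * gain) / 32767 / gain

print(round(float_result, 6))  # 0.8 -> nothing was lost
print(round(int_result, 6))    # 0.25 -> the peak was clipped
```

The integer version has no room above full scale, so the boosted peak is flattened to 1.0 and stays flattened after the gain is undone.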

There is another term you may have heard when talking about digital audio: bit rate. Let’s take a look at what bit rate is and how it relates to sample rate and bit depth.

Bit Rate

Bit rate refers to the amount of data used to represent one second of audio in a digital file. It’s typically measured in kilobits per second (kbps). For uncompressed audio, bit rate is the product of the sample rate, the bit depth, and the number of channels. For compressed formats, the bit rate is chosen when the file is encoded, and it largely determines the overall size and quality of the file.
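For uncompressed PCM audio, the bit rate math is simple multiplication. Here is a quick Python sketch; the function name is just for illustration.

```python
def pcm_bit_rate_kbps(sample_rate_hz: int, bit_depth: int, channels: int) -> float:
    """Bit rate of uncompressed PCM audio in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000


print(pcm_bit_rate_kbps(44_100, 16, 2))  # CD audio: 1411.2
print(pcm_bit_rate_kbps(96_000, 24, 2))  # 96 kHz / 24-bit stereo: 4608.0
```

That 1,411.2 kbps figure is the familiar bit rate of CD audio.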

To understand bit rate, imagine you’re trying to send an image through a slow internet connection. To make the image load faster, you could reduce its quality by compressing it. This would result in a smaller file size, but the image would likely appear less detailed and exhibit visible artifacts. In the context of digital audio, the bit rate is like the level of compression applied to the audio file. Lower bit rates result in smaller file sizes but can introduce audible artifacts and a loss of audio quality, whereas higher bit rates provide better audio quality but larger file sizes.

Bit rate is often discussed in the context of compressed audio formats, such as MP3 or AAC. In these formats, a lower bit rate (e.g., 128 kbps) will yield a smaller file size with potentially lower audio quality, while a higher bit rate (e.g., 320 kbps) will produce a larger file with better audio quality.
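To see what those bit rates mean for file size, here is a rough Python estimate for a hypothetical four-minute song. It uses decimal megabytes and ignores metadata and container overhead, so real files will be a little larger.

```python
def file_size_mb(bit_rate_kbps: float, seconds: float) -> float:
    """Approximate file size in megabytes (1 MB = 1,000,000 bytes)."""
    bits = bit_rate_kbps * 1000 * seconds
    return bits / 8 / 1_000_000


song_seconds = 4 * 60  # a hypothetical four-minute song
for label, kbps in (("MP3 128 kbps", 128), ("MP3 320 kbps", 320), ("CD (uncompressed)", 1411.2)):
    print(f"{label}: {file_size_mb(kbps, song_seconds):.1f} MB")
# MP3 128 kbps: 3.8 MB
# MP3 320 kbps: 9.6 MB
# CD (uncompressed): 42.3 MB
```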

Bit Depth vs. Bit Rate

It’s important to remember that bit depth and bit rate are not interchangeable terms! They refer to different aspects of digital audio:

- Bit depth determines the resolution or accuracy of the amplitude representation in a digital audio file, affecting the dynamic range and noise performance.

- Bit rate refers to the amount of data used to represent one second of audio, affecting the file size and quality of compressed audio formats.

Understanding the differences between these two terms is crucial for making informed decisions about your audio projects and ensuring the highest possible audio quality.

That is a lot of info, and there is so much more! To better understand sample rate, we also need to understand the Nyquist Theory.

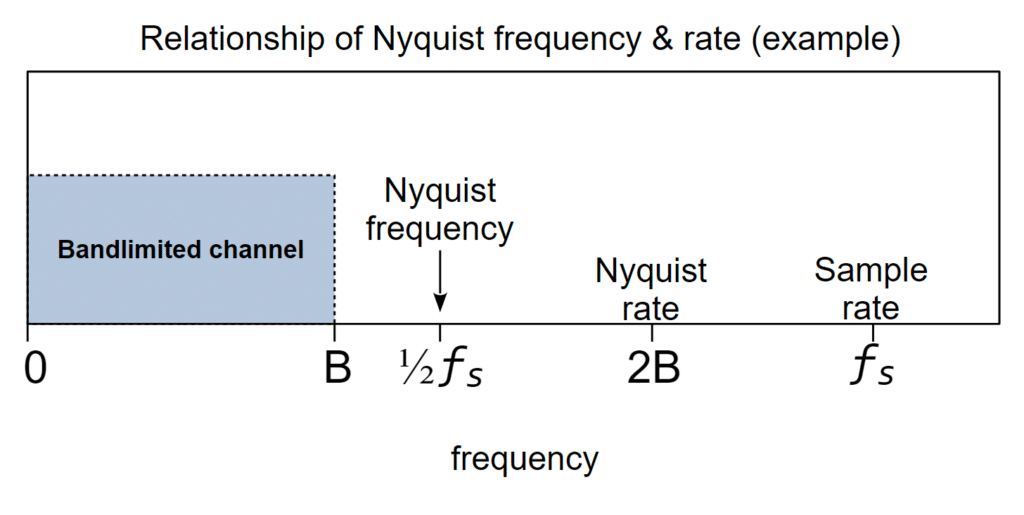

The Nyquist Theory states that to accurately reproduce an analog signal, it must be sampled at a rate of at least twice its highest frequency component.

This minimum sampling rate, known as the Nyquist Rate, ensures that all information in the original signal is captured without distortion or aliasing. Failure to meet the Nyquist Rate can result in aliasing, which is when higher frequency components are misinterpreted as lower frequencies, leading to a distorted digital representation of the original signal.
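Aliasing is easier to believe when you see it. Here is a Python sketch, assuming pure tones and no anti-aliasing filter (real converters filter out content above half the sample rate before sampling). The `alias_frequency` helper is hypothetical; it folds a tone’s frequency into the range a given sample rate can represent.

```python
import math


def alias_frequency(freq_hz: float, sample_rate_hz: float) -> float:
    """Frequency a pure tone appears at after sampling (no anti-aliasing filter)."""
    # Fold the tone into the range 0 .. sample_rate / 2
    return abs(freq_hz - sample_rate_hz * round(freq_hz / sample_rate_hz))


fs = 44_100
print(alias_frequency(30_000, fs))  # 14100 -> a 30 kHz tone shows up at 14.1 kHz

# The samples of the two tones are indistinguishable
high = [math.cos(2 * math.pi * 30_000 * n / fs) for n in range(8)]
low = [math.cos(2 * math.pi * 14_100 * n / fs) for n in range(8)]
print(all(math.isclose(h, l, abs_tol=1e-9) for h, l in zip(high, low)))  # True
```

Cosines are used so the two sets of samples match exactly; with sines, the aliased copy comes out phase-inverted.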

This again is a textbook definition, but the history behind this principle of digital audio is really cool! To understand its historical context and impact on the audio industry, we must first look into the life of Harry Nyquist, the man behind the theorem, and then explore how his ideas have influenced the development of digital audio. So, let’s take a small trip in history!

Harry Nyquist: The Man Behind the Theorem

Harry Nyquist was a Swedish-born American engineer and physicist who made significant contributions to the fields of communication theory and control systems. Born in 1889 in Sweden, Nyquist immigrated to the United States in 1907 and later earned his Ph.D. in physics from Yale University. In 1917, he began working at AT&T, and he spent the rest of his career there and at Bell Telephone Laboratories, conducting research in various areas, including telegraphy, thermal noise, and sampling theory.

In 1928, Nyquist published a groundbreaking paper titled “Certain Topics in Telegraph Transmission Theory,” which laid the foundation for his famous sampling theorem. In this paper, Nyquist investigated the problem of signal distortion in telegraphy and developed a mathematical framework for understanding how signals could be transmitted without loss of information. This early work, combined with further research by Claude Shannon in the 1940s, would eventually lead to the formulation of the Nyquist-Shannon sampling theorem.

The Nyquist-Shannon Sampling Theorem

The Nyquist-Shannon sampling theorem, established in its final form by Claude Shannon in 1949, states that a continuous signal can be accurately reconstructed from its discrete samples if the sampling rate is at least twice the highest frequency present in the signal. In other words, to capture all the information in a continuous signal without introducing aliasing (undesirable artifacts caused by under-sampling), the signal must be sampled at a rate that is at least double its highest frequency component. This minimum sampling rate is called the Nyquist rate. Half of any given sample rate is called the Nyquist frequency, which is the highest frequency that sample rate can represent: 22.05 kHz for 44.1 kHz audio.

The Impact of the Nyquist Theory on Modern Digital Audio

The Nyquist theory has had a profound influence on the development of digital audio technology, as it provides a fundamental guideline for determining the appropriate sampling rate for converting analog audio signals into digital representations.

- Establishing the CD Audio Standard: One of the most notable applications of the Nyquist theory is the establishment of the Compact Disc (CD) audio standard. The human auditory range extends approximately from 20 Hz to 20,000 Hz, so according to the Nyquist theorem, the sampling rate should be at least 40,000 Hz (40 kHz) to accurately represent this frequency range. The CD audio standard uses a sampling rate of 44.1 kHz, which comfortably exceeds the minimum requirement. The extra margin leaves room for the anti-aliasing filter to roll off between 20 kHz and 22.05 kHz (half the sample rate), ensuring accurate representation of the audible frequency spectrum.

- Developing High-Resolution Audio Formats: The Nyquist theory has also been instrumental in the development of high-resolution audio formats, such as 96 kHz/24-bit or 192 kHz/24-bit recordings. While the audible benefits of these higher sampling rates are still a subject of debate, they extend the representable frequency range well beyond human hearing and can capture ultrasonic frequencies, which may have subtle effects on the audible sound or be useful in specific applications like sound design or archiving.

- Guiding the Design of Analog-to-Digital and Digital-to-Analog Converters: The Nyquist theorem has informed the design of analog-to-digital converters (ADCs) and digital-to-analog converters (DACs), which are essential components in the conversion process between analog and digital audio signals. ADCs must sample at a rate of at least twice the highest frequency they are meant to capture, and both ADCs and DACs rely on filters (anti-aliasing filters on the way in, reconstruction filters on the way out) to remove content above half the sample rate, ensuring accurate signal conversion without introducing aliasing.

- Influencing Audio Processing Techniques: The Nyquist theory has also influenced various audio processing techniques, such as oversampling and decimation. Oversampling is a technique where an audio signal is sampled or processed at a rate significantly higher than the minimum the theorem requires, which pushes the highest representable frequency far above the audible range, allowing for more precise digital processing and reduced aliasing. After processing, the signal can be filtered and downsampled (decimated) back to its original sample rate, which reduces the computational requirements for further processing or playback.

- Informing Audio Education and Best Practices: The Nyquist theory has become a fundamental concept taught in audio engineering and digital signal processing education. Understanding the theorem is crucial for audio professionals to make informed decisions about sampling rates, bit depths, and various processing techniques to ensure high-quality audio production.
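Here is a rough Python illustration of why oversampling helps. It assumes a hypothetical saturation plugin that adds a third harmonic to a 15 kHz tone, and a hypothetical `alias_frequency` helper that folds a frequency into the range a sample rate can represent; no real filters are modeled.

```python
def alias_frequency(freq_hz: float, sample_rate_hz: float) -> float:
    """Fold a frequency into 0 .. sample_rate/2, as sampling does without a filter."""
    return abs(freq_hz - sample_rate_hz * round(freq_hz / sample_rate_hz))


tone_hz = 15_000
harmonic_hz = 3 * tone_hz  # 45 kHz third harmonic from the (hypothetical) saturation

for fs in (44_100, 4 * 44_100):  # normal rate vs. 4x oversampling
    nyquist = fs / 2
    heard_at = alias_frequency(harmonic_hz, fs)
    verdict = "folds down to" if harmonic_hz > nyquist else "stays at"
    print(f"{fs} Hz: harmonic {verdict} {heard_at} Hz (Nyquist frequency {nyquist:g} Hz)")
# 44100 Hz: harmonic folds down to 900 Hz (Nyquist frequency 22050 Hz)
# 176400 Hz: harmonic stays at 45000 Hz (Nyquist frequency 88200 Hz)
```

At 44.1 kHz the harmonic lands at an unrelated 900 Hz, right in the audible range. At 4× the rate it stays above 20 kHz, where a filter can remove it before the signal is decimated back down.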

The Nyquist theory has been a cornerstone in the development of modern digital audio technology. Its influence can be seen in the establishment of industry standards, the design of critical audio hardware components, and the development of advanced audio processing techniques. By providing a mathematical framework for understanding the relationship between sampling rate and frequency content, the Nyquist-Shannon sampling theorem has helped shape the way we capture, process, and reproduce sound in the digital era.

I always disliked math because I never grasped its rigid rules as easily, and I always excelled at more creative endeavors such as writing and music. Music is art and creativity, and it is ALSO MATH! I just can’t escape my old nemesis.

We now know what sample rate, bit depth, and bit rate are, what the Nyquist theory says, and some of the history of how it came to be. Let’s take that knowledge and apply it to our own work in digital audio.

Choosing the Right Sample Rate and Bit Depth for Recording

Selecting the right sample rate and bit depth for recording music depends on your project’s specific needs and your goals for the final product. Here are some recommendations to help you make the best choice.

Selecting Sample Rate

For most music projects, a sample rate of 44.1 kHz or 48 kHz is sufficient. These sample rates offer a good balance between audio quality and file size, making them suitable for most applications. If you’re working on a project that requires a higher level of detail, such as classical music or sound design for film, you may want to consider using a sample rate of 88.2 kHz or 96 kHz. These higher sample rates can capture more of the subtle nuances in the audio, resulting in a more accurate digital representation.

When and Why a Higher Sample Rate is Beneficial

There are specific scenarios where using a higher sample rate can be advantageous. These situations include:

- High-frequency content: If your audio material contains a significant amount of high-frequency content, such as an orchestra or certain electronic sounds, a higher sample rate can help capture and reproduce these frequencies more accurately.

- Sound design and manipulation: In sound design or experimental music, where audio is heavily processed or manipulated, using a higher sample rate can help maintain the quality and integrity of the original sound source throughout the processing chain.

- Archiving and future-proofing: If you’re working on a project with a long shelf life, such as film scores or archival recordings, using a higher sample rate can help ensure that your audio remains compatible with future advancements in playback technology.

Remember, higher sample rates come with larger file sizes and increased processing requirements, so you’ll need to weigh the potential benefits against the practical implications for your specific project.

Selecting Bit Depth

When it comes to bit depth, I generally recommend using a 24-bit depth for recording. This provides a significantly higher dynamic range than 16-bit (about 144 dB versus 96 dB in theory), allowing for greater flexibility during the mixing and mastering process. A 24-bit recording can capture a wider range of loud and soft sounds without introducing unwanted noise or distortion. While a 32-bit depth is available in some digital audio workstations, it’s unnecessary for most recording situations and results in larger file sizes.
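To make that flexibility concrete, here is a rough Python calculation. It assumes a hypothetical recording that peaks at -18 dBFS (a conservative level that leaves room for surprises) and uses the theoretical dynamic range of each bit depth; in practice, microphones and preamps have noise floors well above the 24-bit limit.

```python
import math


def dynamic_range_db(bits: int) -> float:
    """Theoretical dynamic range of integer PCM at a given bit depth."""
    return 20 * math.log10(2 ** bits)


peak_dbfs = -18.0  # hypothetical conservative recording level
for bits in (16, 24):
    # Distance between the recording's peaks and the theoretical noise floor
    margin_db = dynamic_range_db(bits) + peak_dbfs
    print(f"{bits}-bit: about {margin_db:.1f} dB between peaks and the noise floor")
# 16-bit: about 78.3 dB between peaks and the noise floor
# 24-bit: about 126.5 dB between peaks and the noise floor
```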

Selecting the Ideal Sample Rate and Bit Depth for Mixing and Mastering

Mixing and mastering are crucial stages in the audio production process, where balance, tonal quality, and overall loudness are fine-tuned to create a polished final product. Here are some guidelines for choosing the appropriate sample rate and bit depth during these stages.

Sample Rate For Mixing & Mastering

When it comes to mixing, it’s generally best to work at the same sample rate as your original recordings. This will help maintain consistency and avoid potential artifacts introduced by sample rate conversion. However, if you’re working with audio from multiple sources with different sample rates, you may need to choose a common sample rate for your project.

During the mastering process, you might consider up-sampling to a higher sample rate, especially if your final product will be distributed in a high-resolution audio format. However, be aware that up-sampling won’t improve the audio quality of your mix or master. Up-sampling only calculates new samples in between the ones captured at the original, lower sample rate; it can’t recover detail (such as frequencies above half the original sample rate) that was never recorded.
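Here’s a simplified Python sketch of 2× up-sampling. It uses plain linear interpolation to keep things short (real sample rate converters use band-limited filters instead), and the function name is hypothetical. Either way, the new samples are calculated from the old ones, so no new information is added.

```python
def upsample_2x_linear(samples: list[float]) -> list[float]:
    """Double the sample rate by inserting the midpoint between neighbouring samples."""
    if not samples:
        return []
    out = []
    for a, b in zip(samples, samples[1:]):
        out.extend([a, (a + b) / 2])  # keep the original, then add an in-between value
    out.append(samples[-1])
    return out


original = [0.0, 0.5, 1.0, 0.5, 0.0]
upsampled = upsample_2x_linear(original)
print(upsampled)  # [0.0, 0.25, 0.5, 0.75, 1.0, 0.75, 0.5, 0.25, 0.0]
print(upsampled[::2] == original)  # True: the original samples are untouched
```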

Bit Depth For Mixing & Mastering

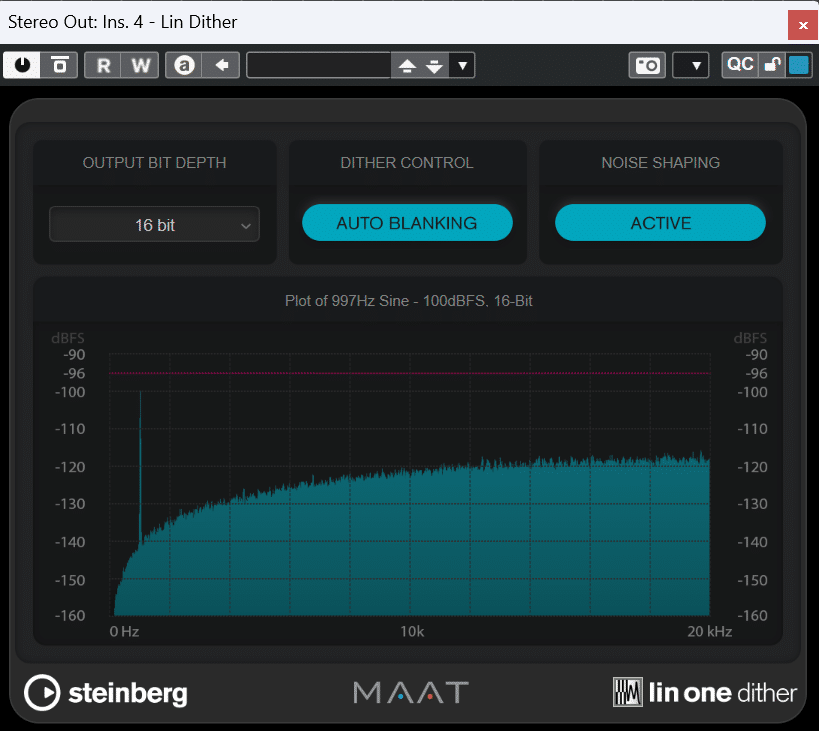

For mixing and mastering, I recommend working with a 32-bit floating-point bit depth, if your digital audio workstation supports it. This allows for greater headroom and dynamic range, which can be particularly beneficial during the mastering process. When you’re ready to export your final mix, you’ll need to dither and reduce the bit depth to 24-bit or 16-bit, depending on your intended distribution format.

Dither is something that is easy to gloss over, but it’s crucial to understand what it is and when to use it!

Dither

Dither is a technique used in digital audio processing to reduce the unwanted noise and distortion that can be introduced when reducing the bit depth of an audio file. Now, you might wonder why we would want to add noise to our audio. It seems counterintuitive, right? Well, let’s dive into the reasons behind it.

When we record or process audio digitally, we represent the audio signal with a certain number of bits. The more bits we use, the more accurate the representation of the audio. However, sometimes we need to reduce the bit depth, for example, when exporting a final mix or when working with older hardware or software that only supports lower bit depths. Reducing bit depth means rounding every sample to a coarser set of levels (quantization), and those rounding errors follow the signal, which on quiet or fading passages can be heard as a type of distortion called quantization noise.

This is where dither comes in. Dither is a low-level noise added to the audio signal before quantization, which helps to randomize and spread out the quantization errors. By doing this, dither effectively masks the distortion and makes it less noticeable to our ears, which is a good thing! Our ears are more forgiving of low-level noise than they are of distortion, so the end result is a more pleasant listening experience.
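Here is a minimal Python sketch of dither, assuming TPDF (triangular) dither spanning ±1 LSB, which is one common type; your DAW’s dither options (including noise-shaped ones) may work differently. The function name and the test tone are hypothetical. A tone quieter than half of one 16-bit step disappears entirely when simply rounded, but survives, under a layer of gentle noise, when dithered first.

```python
import math
import random
from typing import Optional


def requantize(x: float, bits: int, rng: Optional[random.Random] = None) -> int:
    """Reduce a float sample (-1.0..1.0) to a signed integer; dither if rng is given."""
    scale = 2 ** (bits - 1) - 1
    value = x * scale  # now measured in LSBs (the size of one integer step)
    if rng is not None:
        # TPDF dither: two uniform values summed give a triangle spanning -1..+1 LSB
        value += rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)
    return max(-scale - 1, min(scale, round(value)))


BITS = 16
LSB = 1 / (2 ** (BITS - 1) - 1)
# A very quiet 1 kHz tone at 44.1 kHz whose peak is only 0.4 of one 16-bit step
tone = [math.sin(2 * math.pi * 1000 * n / 44_100) for n in range(44_100)]
quiet = [0.4 * LSB * t for t in tone]

rng = random.Random(0)  # fixed seed so the run is repeatable
plain = [requantize(x, BITS) for x in quiet]
dithered = [requantize(x, BITS, rng) for x in quiet]

print(any(plain))  # False: without dither, every sample rounds to zero
print(any(dithered))  # True: with dither, the samples are no longer all zero
# Correlating with the original tone confirms it is still present on average
print(sum(d * t for d, t in zip(dithered, tone)) > 0)  # True
```

The dithered output is mostly low-level noise, but the tone is still in there on average instead of vanishing or turning into distortion, which is exactly the trade dither makes.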

So, when should you use dither? Typically, you’ll want to apply dither when you’re reducing the bit depth of your audio file, such as when you’re exporting your final mix from your digital audio workstation (DAW). Most DAWs have built-in dithering options that you can choose during the export process. It’s essential to note that you should only apply dither once at the very end of your audio processing chain. Applying it multiple times can accumulate noise, which is something we want to avoid.

We Could Go Even Deeper!

But I’m not going to. This post has practically turned into a book! If you’ve made it this far, congratulations! I’m confident you now have an understanding of what sample rate, bit depth, and bit rate are, as well as the Nyquist theory and dither. This will greatly help you level up the quality of your recordings, and it will help throughout the mixing and mastering phase of your work as well.

If you’d like to apply this knowledge to leveling up your recordings, check out these other posts on ways to improve your recording game:

Record Vocals Like A Pro: 15 Steps for Professional Results

How To Properly Record Guitars

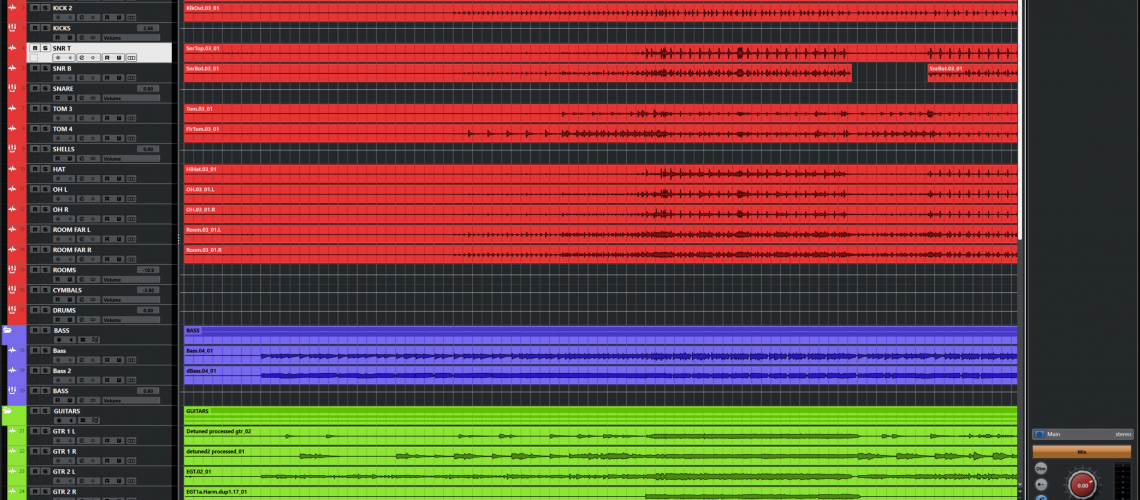

Sample rate and bit depth are the building blocks of digital audio, but it’s the mixing and mastering process that brings your music to life. With expert care, these technical details become part of a rich, compelling soundscape. At Twisby Records, we understand how to achieve that.

-John